In this blog post, we explain what a process is in an operating system, covering process control blocks, scheduling, context switching, and process creation and termination.

Definition of Process

A process is a program in execution, together with the code, memory, data, and other resources it needs. More generally, a process is a series of events that leads to a specific objective.

Everyday examples of the general idea include a production process, the management of a unit, or service to a client.

Parts of a Process in an Operating System

- Program Code (Text Section): This is the executable code of the process.

- Program Counter (PC): A register that holds the address of the next instruction to be executed in the process.

- Stack: Stores temporary data such as function parameters, return addresses, and local variables. It grows and shrinks as functions are called and return.

- Heap: Memory allocated dynamically while the process runs, used for objects and data whose size isn’t known at compile time or may change. It grows and shrinks as memory is allocated and freed.

- Data Section: Contains global and static variables used by the process throughout its execution.

- Process State: Describes the current status of the process (e.g., new, ready, running, waiting, terminated).

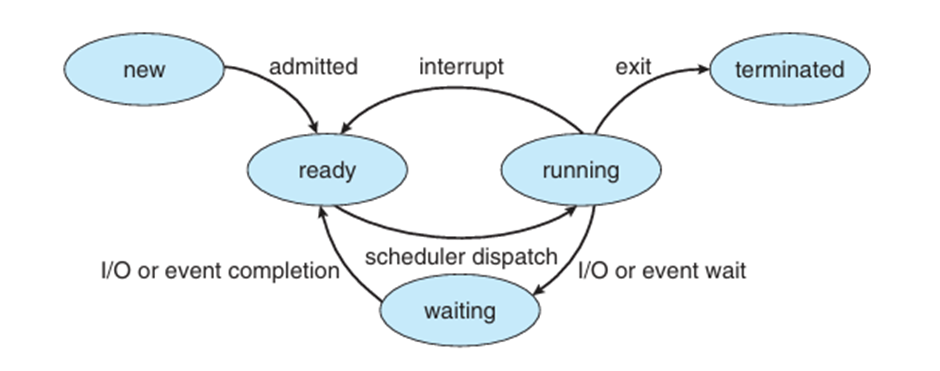

PROCESS STATES

A process state refers to the current status or condition of a process as it moves through its lifecycle in an operating system. Since processes are dynamic, they transition between different states based on their current activity, resource needs, or the system’s scheduling decisions.

Common Process States

- New: The process is being created. It has not yet started executing.

- Ready: The process is prepared to run and is waiting to be assigned to the CPU. It has all the necessary resources except CPU time.

- Running: The process is currently being executed by the CPU. It is actively performing its tasks.

- Waiting (Blocked): The process is waiting for some event to occur (such as input/output completion or resource availability). It cannot proceed until the event happens.

- Terminated: The process has completed its execution or has been killed by the operating system or user. It is no longer active but may still have information retained for cleanup.

These states help the operating system manage and schedule processes efficiently, ensuring that system resources like CPU, memory, and I/O are used optimally. Processes transition between states as they execute, wait for resources, or finish their tasks.
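The transitions between these states can be sketched as a small lookup table. This is a minimal illustration of the five-state model described above, not how any particular kernel stores state; the names `State`, `TRANSITIONS`, and `move` are made up for the example.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Legal moves in the five-state model (hypothetical table for illustration).
TRANSITIONS = {
    State.NEW: {State.READY},                                       # admitted
    State.READY: {State.RUNNING},                                   # dispatched
    State.RUNNING: {State.READY, State.WAITING, State.TERMINATED},  # preempted, blocked, or finished
    State.WAITING: {State.READY},                                   # awaited event occurred
    State.TERMINATED: set(),                                        # no way back
}


def move(current: State, target: State) -> State:
    """Return target if the transition is legal, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target


# Walk one process through a typical lifecycle.
state = State.NEW
for nxt in (State.READY, State.RUNNING, State.WAITING,
            State.READY, State.RUNNING, State.TERMINATED):
    state = move(state, nxt)
```

Note that a waiting process cannot go straight back to running: it first returns to ready and has to be selected by the scheduler again.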

PROCESS CONTROL BLOCK

The Process Control Block (PCB), also called a task control block, is a critical data structure in the operating system that contains essential information about a process. The operating system uses the PCB to manage processes and to support execution, context switching, and resource allocation.

Role of process control block

- Context Switching: When the CPU switches from one process to another, the current state of the running process is saved in its PCB. The saved state is restored when the process resumes execution.

- Process Tracking: The PCB tracks all the relevant data needed for process management, including memory usage, CPU state, and I/O interactions.

- Resource Management: The operating system uses the information in the PCB to allocate and manage system resources for the process.

In short, the PCB allows the operating system to manage multiple processes efficiently by storing and organizing all necessary information about each process.

Key Components of the Process Control Block (PCB):

- Process ID (PID): A unique identifier assigned to each process.

- Process State: Indicates the current state of the process (e.g., new, ready, running, waiting, terminated).

- Program Counter: Stores the address of the next instruction to be executed for the process.

- CPU Registers: Contains values for registers (e.g., accumulators, stack pointers, index registers). These values are saved and restored during context switching between processes.

- CPU-Scheduling Information: Includes process priority, scheduling queue pointers, and other scheduling parameters used by the CPU scheduler.

- Memory Management Information: Includes details like base and limit registers, page tables, or segment tables that control the process’s memory space.

- Accounting Information: Contains process-specific usage data such as the amount of CPU time used, elapsed real time, and time limits.

- I/O Status Information: Information about the I/O devices allocated to the process, including the list of I/O requests and the status of pending I/O operations.

- List of Open Files: Maintains a list of files the process has opened during its execution.
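To make the components above concrete, here is a rough sketch of a PCB as a Python data class. The field names and types are assumptions chosen for readability; a real kernel structure holds far more detail and is not written in Python.

```python
from dataclasses import dataclass, field


@dataclass
class PCB:
    """Illustrative process control block; every field name here is hypothetical."""
    pid: int                                         # process ID
    state: str = "new"                               # process state
    program_counter: int = 0                         # address of the next instruction
    registers: dict = field(default_factory=dict)    # saved CPU registers
    priority: int = 0                                # CPU-scheduling information
    memory_limits: tuple = (0, 0)                    # (base, limit) memory management info
    cpu_time_used: float = 0.0                       # accounting information
    pending_io: list = field(default_factory=list)   # I/O status information
    open_files: list = field(default_factory=list)   # list of open files


pcb = PCB(pid=42, priority=5, memory_limits=(0x1000, 0x4000))
pcb.open_files.append("/tmp/example.log")  # example path, purely illustrative
pcb.state = "ready"
```

Using `default_factory` gives each PCB its own register set and file list, which mirrors the point that every process has a separate control block.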

Process Scheduling

Process Scheduling is a core function of the operating system that determines the order in which processes are executed by the CPU. Since there are typically more processes than available CPUs, the operating system must efficiently manage which process gets to run and for how long. The goal is high CPU utilization, good system responsiveness, and a fair distribution of resources among processes.

Key Concepts in Process Scheduling:

- CPU Scheduler: The CPU scheduler selects which process from the ready queue (the list of processes that are ready to execute) runs on the CPU next. It is also referred to as the short-term scheduler.

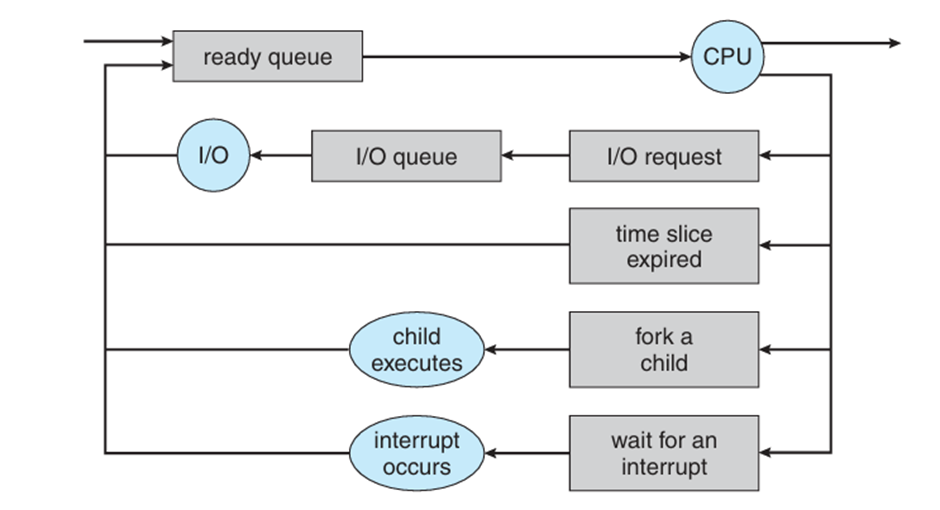

- Scheduling Queues: Processes are placed in different scheduling queues during their lifecycle.

- Job Queue: All processes in the system.

- Ready Queue: Processes that are ready and waiting to be executed.

- Waiting (Blocked) Queue: Processes waiting for an event like I/O completion.

- Device Queue: Processes waiting for a particular I/O device. Each device has its own queue, where processes wait until the device becomes available for use.

A queuing diagram illustrates the flow of processes between queues, such as the ready queue (waiting for CPU time) and device queues (waiting for I/O devices). Processes move from the ready queue to the CPU for execution and may enter a device queue if they need I/O operations, before returning to the ready queue or terminating after completion.
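The movement between the ready queue and a device queue can be sketched with ordinary FIFO queues. The process names and the `disk` and `printer` devices below are invented for the example, and real systems typically link PCBs together rather than storing names.

```python
from collections import deque

# Hypothetical queues: one ready queue plus one queue per device.
ready_queue = deque(["P1", "P2", "P3"])
device_queues = {"disk": deque(), "printer": deque()}

# P1 is taken from the ready queue and given the CPU.
running = ready_queue.popleft()

# P1 issues a disk request, so it leaves the CPU and waits in the disk queue.
device_queues["disk"].append(running)

# The next ready process (P2) gets the CPU.
running = ready_queue.popleft()

# The disk I/O completes: P1 goes back to the end of the ready queue.
ready_queue.append(device_queues["disk"].popleft())
```

After these steps P2 is running, the ready queue holds P3 followed by P1, and both device queues are empty.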

Dispatcher

The dispatcher is the component that gives control of the CPU to the process selected by the scheduler. It performs the context switch, moving the CPU from one process to another.

Once the process is allocated the CPU and is executing, one of several events could occur:

- The process could issue an I/O request and then be placed in an I/O queue.

- The process could create a new child process and wait for the child’s termination.

- The process could be removed forcibly from the CPU, as a result of an interrupt, and be put back in the ready queue.
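The last case, forced removal followed by a return to the ready queue, can be illustrated with a tiny simulation. Round-robin with a fixed time quantum is used here only as a convenient example policy; the post does not prescribe it, and the function name `round_robin` and the burst lengths are assumptions.

```python
from collections import deque


def round_robin(bursts: dict, quantum: int) -> list:
    """Return the sequence of (process, ticks_run) slices under round-robin."""
    ready = deque(bursts.items())
    timeline = []
    while ready:
        # The scheduler picks the head of the ready queue; the dispatcher hands it the CPU.
        name, remaining = ready.popleft()
        ran = min(quantum, remaining)
        timeline.append((name, ran))
        remaining -= ran
        if remaining > 0:
            # Timer interrupt: the process is preempted and rejoins the ready queue.
            ready.append((name, remaining))
    return timeline


timeline = round_robin({"A": 5, "B": 2, "C": 4}, quantum=2)
```

With these numbers A is preempted twice and C once, while B finishes within its first quantum and never returns to the queue.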

Schedulers in Operating System

Schedulers are components of an operating system that determine which process will run on the CPU, when, and for how long. The primary goal of schedulers is to efficiently manage system resources and ensure fair process execution.

Purpose of Schedulers:

- Maximize CPU Utilization: By keeping the CPU busy with tasks.

- Improve Throughput: By increasing the number of processes completed in a given time.

- Minimize Turnaround and Response Time: Ensuring that processes are executed as efficiently as possible.

- Fairness: Distributing CPU time and system resources fairly among processes.
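A small worked calculation shows how these goals are measured. The sketch below assumes all processes arrive at time 0 and run to completion in the order given (first come, first served); the burst lengths are example values, and `fcfs_metrics` is a hypothetical helper.

```python
def fcfs_metrics(bursts):
    """Average turnaround, average waiting time, and throughput for jobs run in order."""
    clock = 0
    turnaround, waiting = [], []
    for burst in bursts:
        waiting.append(clock)      # time spent in the ready queue before starting
        clock += burst
        turnaround.append(clock)   # completion time minus arrival time (arrival is 0)
    n = len(bursts)
    return {
        "avg_turnaround": sum(turnaround) / n,
        "avg_waiting": sum(waiting) / n,
        "throughput": n / clock,   # processes completed per time unit
    }


in_arrival_order = fcfs_metrics([24, 3, 3])
shortest_first = fcfs_metrics(sorted([24, 3, 3]))
```

Running the long job first gives an average waiting time of 17 time units, while running the short jobs first brings it down to 3. Throughput is the same in both cases because the total work is unchanged.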

Types of Schedulers:

Long-Term Scheduler (Job Scheduler)

- Controls the admission of processes into the system.

- Decides which processes are loaded into the ready queue (main memory) from the job queue (secondary storage).

- It executes less frequently, as job admission is not a constant task. Its goal is to maintain a balanced mix of I/O-bound and CPU-bound processes.

Short-Term Scheduler (CPU Scheduler)

- Manages the selection of processes from the ready queue to execute on the CPU.

- Executes very frequently, often many times per second, since processes constantly need to be scheduled.

- Aims to keep the CPU busy at all times, maximizing CPU utilization.

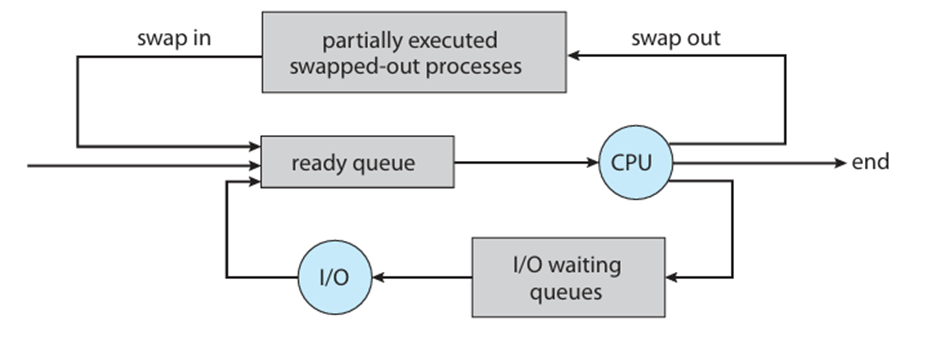

Medium-Term Scheduler

- Temporarily removes processes from main memory and moves them to secondary storage (this is called swapping) to free up memory and improve multitasking efficiency.

- It plays a role in suspending and resuming processes, helping with memory management and optimizing performance.

The mix of processes in memory affects performance. If all processes are I/O bound, the ready queue will almost always be empty, and the short-term scheduler will have little to do. If all processes are CPU bound, the I/O waiting queue will almost always be empty, devices will go unused, and again the system will be unbalanced. The system with the best performance will thus have a combination of CPU-bound and I/O-bound processes.

I/O-Bound and CPU-Bound Processes

| Feature | I/O-Bound Processes | CPU-Bound Processes |
| --- | --- | --- |
| Definition | Spend more time waiting for I/O operations than using the CPU. | Spend more time using the CPU for calculations and processing. |
| Characteristics | Frequent I/O operations, short CPU bursts. | Long CPU bursts, minimal I/O. |
| Examples | Web browsers, file transfer applications. | Scientific simulations, video rendering. |
| Performance Impact | Optimizing I/O can improve overall system performance. | Optimizing CPU scheduling enhances performance. |

SWAPPING

Swapping is a memory management technique used by operating systems to temporarily move processes between main memory (RAM) and secondary storage (disk) to manage the available memory efficiently.

The medium-term scheduler swaps a process out and later swaps it back in. Swapping may be necessary to improve the process mix or because a change in memory requirements has overcommitted available memory, requiring memory to be freed up.
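The idea can be sketched as a toy model in which memory and disk are dictionaries mapping process names to sizes. The policy shown, swapping out blocked processes first, is an assumption made for the example, as are the function name `load` and all the numbers.

```python
def load(memory, disk, capacity, name, size, blocked):
    """Bring `name` into memory, swapping blocked processes out to disk if space is short."""
    used = sum(memory.values())
    for victim in list(memory):
        if used + size <= capacity:
            break                               # enough room already
        if victim in blocked:
            disk[victim] = memory.pop(victim)   # swap out: move the image to disk
            used -= disk[victim]
    if used + size > capacity:
        raise MemoryError(f"not enough memory for {name}")
    disk.pop(name, None)                        # swap in: it no longer lives on disk
    memory[name] = size


memory = {"A": 40, "B": 50}   # sizes in arbitrary units
disk = {}
load(memory, disk, capacity=100, name="C", size=30, blocked={"B"})
```

Here B is waiting on an event, so it is moved to disk to make room for C, while A stays in memory.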

CONTEXT SWITCH

Context Switching is the process used by an operating system to switch the CPU from one process or thread to another. It allows multiple processes or threads to share the CPU effectively, enabling multitasking.

Key Points of Context Switching

- Process State: During context switching, the operating system saves the current process’s state, including CPU register values, program counter, and memory management information, in its Process Control Block (PCB).

- Scheduler Role: The scheduler determines which process to run next based on the scheduling algorithm.

- Steps:

- The running process is interrupted.

- Its state is saved in the PCB.

- The scheduler selects the next process.

- The state of the selected process is loaded.

- Execution resumes with the new process.

- State Save: Capturing the current execution context of the running process (CPU register values, program counter, and memory management data) and storing it in the process’s PCB so that a context switch can take place.

- State Restore: Loading the previously saved execution context of a process from its PCB back into the CPU and the relevant data structures so that the process can resume execution from where it left off.
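The state save and state restore steps can be sketched with a simulated CPU. This is a simplified model under stated assumptions: the `CPU` and `PCB` classes, the single `acc` register, and the program counter values are all hypothetical, and a real context switch is done by kernel code working with hardware registers.

```python
from dataclasses import dataclass, field


@dataclass
class PCB:
    pid: int
    program_counter: int = 0
    registers: dict = field(default_factory=dict)
    state: str = "ready"


class CPU:
    """Simulated CPU holding the context of whichever process is running."""
    def __init__(self):
        self.program_counter = 0
        self.registers = {}
        self.current = None


def context_switch(cpu: CPU, next_pcb: PCB) -> None:
    old = cpu.current
    if old is not None:
        # State save: copy the CPU context into the outgoing process's PCB.
        old.program_counter = cpu.program_counter
        old.registers = dict(cpu.registers)
        old.state = "ready"
    # State restore: load the incoming process's saved context onto the CPU.
    cpu.program_counter = next_pcb.program_counter
    cpu.registers = dict(next_pcb.registers)
    next_pcb.state = "running"
    cpu.current = next_pcb


cpu = CPU()
p1 = PCB(pid=1, program_counter=100, registers={"acc": 0})
p2 = PCB(pid=2, program_counter=500, registers={"acc": 9})

context_switch(cpu, p1)
cpu.program_counter += 4      # p1 executes for a while
cpu.registers["acc"] = 7
context_switch(cpu, p2)       # p1's context is saved, p2's is loaded
context_switch(cpu, p1)       # p1 resumes exactly where it left off
```

After the final switch the CPU again holds program counter 104 and `acc` equal to 7, the values p1 had when it was interrupted.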

Process Creation

Process Creation is the mechanism by which a new process is generated in an operating system. This is a crucial aspect of multitasking environments, allowing multiple processes to run concurrently and efficiently utilize system resources.

Types of Process Creation

- Forking: A common method in Unix-like systems where a process (parent) creates a duplicate (child). The child process inherits the parent’s attributes, but they run independently.

- Executing New Programs: After forking, the child process can replace its memory space with a new program using system calls like exec(). This allows the child to execute a different program than its parent.

- Creating Threads: Some processes may create threads instead of separate processes. Threads share the same memory space, making communication between them faster and more efficient.

Steps in Process Creation

- Request for Creation: A process can be created through system calls like fork() in Unix/Linux or CreateProcess() in Windows. Typically, a parent process requests the creation of a child process.

- Allocation of Process Control Block (PCB): The operating system allocates a new Process Control Block (PCB) for the new process. The PCB contains essential information about the process, including its state, program counter, CPU registers, memory management information, and I/O status.

- Memory Allocation: The OS allocates memory for the new process, including space for the program code, data, stack, and heap. This may involve creating a new address space or sharing resources with the parent process, depending on the type of process creation.

- Initialization of Process State: The new process starts in the “new” state and moves to “ready” once the operating system admits it. At that point it has everything it needs to run except CPU time.

- Loading the Program: If the new process is meant to execute a different program (as in the case of an exec system call), the operating system loads the program’s executable code and sets up the execution environment.

- Adding to the Scheduling Queue: The new process is added to the ready queue, where it awaits CPU allocation from the scheduler. The scheduler manages which process to run based on scheduling algorithms.
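From a program's point of view, the fork and exec sequence looks roughly like the sketch below. It uses Python's `os` wrappers around the Unix calls and therefore only runs on Unix-like systems. The helper name `spawn_and_wait` and the tiny child program are assumptions made for the example.

```python
import os
import sys


def spawn_and_wait(exit_code: int) -> int:
    """Fork a child, exec a new program in it, and return the child's exit status."""
    pid = os.fork()                      # request creation: the child is a copy of the parent
    if pid == 0:
        # Child: replace this copy with a different program.
        try:
            os.execv(sys.executable,
                     [sys.executable, "-c", f"import sys; sys.exit({exit_code})"])
        finally:
            os._exit(127)                # reached only if exec failed
    # Parent: block until the child terminates, then decode its status.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status) if os.WIFEXITED(status) else -1


code = spawn_and_wait(7)
```

The PCB allocation, memory setup, and queueing steps listed above happen inside the kernel during `fork()` and `exec()`. The program only sees the returned process ID and, later, the exit status.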

PROCESS TERMINATION

Process Termination is the final stage in the lifecycle of a process when it completes its execution and is removed from the system. This process is essential for managing system resources effectively and ensuring the stability of the operating system.

Steps in Process Termination

- Completion of Execution: A process may terminate naturally after completing its assigned tasks or due to an explicit request from the user or the system.

- Termination Signal: A process can end itself with a system call such as exit() in Unix or ExitProcess() in Windows. Another process (e.g., a parent process) can also request or force termination, for example by sending a signal such as SIGTERM with kill() in Unix or by calling TerminateProcess() in Windows.

- Resource Deallocation: Upon termination, the operating system reclaims the resources allocated to the process, including memory, file handles, and I/O devices. This ensures that these resources can be reused by other processes.

- Updating Process Control Block (PCB): The PCB of the terminated process is updated to reflect its termination status, and the operating system marks the process as “terminated.” In Unix-like systems the entry stays in the process table (the process is a “zombie”) until the parent collects the exit status.

- Notifying Parent Process: If the terminated process has a parent, the operating system may notify the parent process of the termination. The parent can then retrieve the exit status of the child process using system calls like wait().

- Cleanup: Once the exit status has been collected, any remaining data structures related to the terminated process, including its process table entry, are cleaned up by the operating system, ensuring that no resources are wasted.
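The signal and wait steps can be tried out with a short script. This is a sketch for Unix-like systems using Python's standard `subprocess` and `signal` modules; the helper name `terminate_child` and the sleeping child program are assumptions made for the example.

```python
import signal
import subprocess
import sys


def terminate_child() -> int:
    """Start a long-running child, send it SIGTERM, and return its wait status."""
    child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
    child.send_signal(signal.SIGTERM)   # parent asks the child to terminate
    return child.wait(timeout=10)       # parent collects the status (like wait())


status = terminate_child()
# On POSIX, subprocess reports a child killed by signal N as return code -N.
killed_by_sigterm = (status == -signal.SIGTERM)
```

Calling `wait()` is what lets the operating system finally discard the child's process table entry. A parent that never collects the status leaves a zombie behind.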

Process termination is a crucial aspect of process management in operating systems. It involves the completion of a process’s execution, deallocation of resources, updating of the process control block, and notification to parent processes. Effective process termination helps maintain system stability and resource efficiency.
